Where The World Is Heading

Let’s take a brief moment to look at one of the hottest topics in Information Technology today: The Cloud.

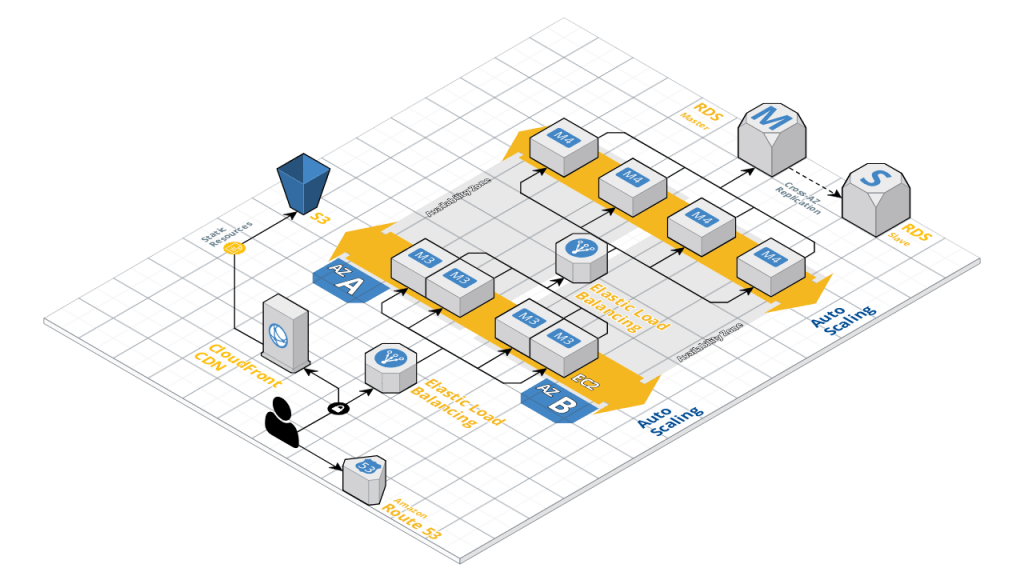

The Cloud is a many-splendored thing. It is a source of infinite resources. It is a cost-saving panacea. It promises to reduce overhead. It uses Python, which is way more modern than Perl. There is a ton of hype around it. But why? Let’s take a look at a typical web services diagram.

(Note: I am going to be using Amazon Web Services terminology here, but the concepts are the same for any major cloud provider such as Azure or Google Cloud)

There is a lot going on in this diagram, and if you are not used to Cloud (specifically, AWS) terminology, it can be confusing. Let me break down the salient points here:

Load Balancing

To the end user, there is one entry point into this web service. That’s all they care about, and that’s all they know about. But there is a dizzying amount of resources behind that one entry point. See all the things labelled M3 and M4? Those are compute instances: web servers or application services. And there are two layers of load balancers to boot. The first layer insulates the end user from the failure of an individual web server instance, or from one server simply getting overloaded with requests. The load balancer redirects traffic in the background to the server with the lowest load. The second layer insulates the web servers from failures in the application layer instances. Amazon’s mantra for design is “Design for failure, and nothing will fail”. They explicitly embrace failure and give you the architectural elements to create architectures that are truly “self healing”.
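To make the "lowest load" idea concrete, here is a minimal sketch in Python. It is not how AWS implements its load balancers; the `Server` class, the server names and the use of active request counts as the load metric are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Server:
    # Hypothetical stand-in for a web server instance behind the balancer.
    name: str
    healthy: bool = True
    active_requests: int = 0


def pick_server(servers):
    """Return the healthy server with the fewest active requests."""
    candidates = [s for s in servers if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy servers available")
    return min(candidates, key=lambda s: s.active_requests)


def route(servers, request_count):
    """Send requests one at a time, each to the least-loaded healthy server."""
    for _ in range(request_count):
        pick_server(servers).active_requests += 1
    return {s.name: s.active_requests for s in servers}


# One server has failed; the end user never notices.
web_tier = [Server("web-a"), Server("web-b"), Server("web-c", healthy=False)]
print(route(web_tier, 10))  # {'web-a': 5, 'web-b': 5, 'web-c': 0}
```

The failed server simply stops receiving traffic, which is the "insulation" described above.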

Fault Tolerance

Notice the two blocks labelled “Availability Zone”? In AWS parlance, an Availability Zone (AZ) is a physically separate datacenter (or group of datacenters). Here, you’ve got load balancers that are not only moving traffic to the least utilized server, but doing so across servers in two completely different facilities. The load balancer itself is what Amazon calls a “managed service”. That is to say, Amazon handles keeping it up, alive and highly available. If you were to look “inside” that icon, there’s probably a whole set of hardware and software spread across AZs itself, but you don’t have to worry about that part of it. You just consume the service.

Auto Scaling

Those web server instances (much like compute instances) are individually disposable. That is to say, every web server looks just like every other web server. You stand it up, throw workload at it and it provides some answer back. In AWS terms, there is a machine image, which is a template you create to define the basic setup of a web server instance. Let’s say that your load balancers do a health check on your web tier and find that all of the servers are above the CPU utilization threshold you’ve set. No problem. The service simply auto-provisions a new server based on your rules, deploys the service and adds it to the load balancer. Now maybe your spike in usage is over. The load balancers see that all of your web servers are at low utilization, so the service scales back down to what you’ve configured as the minimum number of web instances you need online at a time.
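The scale-out and scale-in rule described above can be sketched as a small Python function. The thresholds, the one-instance step and the `desired_capacity` name are my own assumptions for illustration, not AWS defaults or an AWS API.

```python
def desired_capacity(current, cpu_percents, scale_out_at=70.0, scale_in_at=25.0,
                     minimum=2, maximum=10):
    """Return the instance count the web tier should move to.

    Adds one instance when every server is above the scale-out threshold,
    removes one when every server is below the scale-in threshold, and
    always stays within [minimum, maximum]. All numbers are hypothetical.
    """
    if cpu_percents and all(c > scale_out_at for c in cpu_percents):
        target = current + 1
    elif cpu_percents and all(c < scale_in_at for c in cpu_percents):
        target = current - 1
    else:
        target = current
    return max(minimum, min(maximum, target))


print(desired_capacity(2, [91.0, 88.5]))       # 3: everyone is hot, add a server
print(desired_capacity(3, [10.0, 12.0, 8.0]))  # 2: spike is over, scale back in
print(desired_capacity(2, [5.0, 4.0]))         # 2: never drop below the minimum
```

The clamp on the last line is what enforces the configured minimum number of web instances.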

Network Segmentation

See those instances on the right labeled “RDS”? RDS is Amazon’s Relational Database Service (MySQL, PostgreSQL, Oracle, etc.). Those database services are tucked away, out of direct reach of end users. And they probably have firewall rules (called Security Groups) that ensure that only traffic from subnets containing application servers can talk to them directly.
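As a rough sketch of what such a rule evaluates, the Python below checks whether a connection comes from an application subnet on the database port. The subnet ranges and the `is_allowed` helper are made up for illustration; real Security Groups are configured in AWS, not written as code like this.

```python
import ipaddress

# Hypothetical subnets that hold the application servers.
APP_SUBNETS = [
    ipaddress.ip_network("10.0.10.0/24"),
    ipaddress.ip_network("10.0.11.0/24"),
]
DB_PORT = 3306  # default MySQL port


def is_allowed(source_ip, port):
    """Allow only database-port traffic that originates in an app subnet."""
    addr = ipaddress.ip_address(source_ip)
    return port == DB_PORT and any(addr in net for net in APP_SUBNETS)


print(is_allowed("10.0.10.25", 3306))   # True: application server
print(is_allowed("203.0.113.9", 3306))  # False: outside address
print(is_allowed("10.0.11.4", 22))      # False: right subnet, wrong port
```

Anything not explicitly allowed is dropped, so the database never sees traffic from end users.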

Now Let's Compare To The Typical HPC Setup

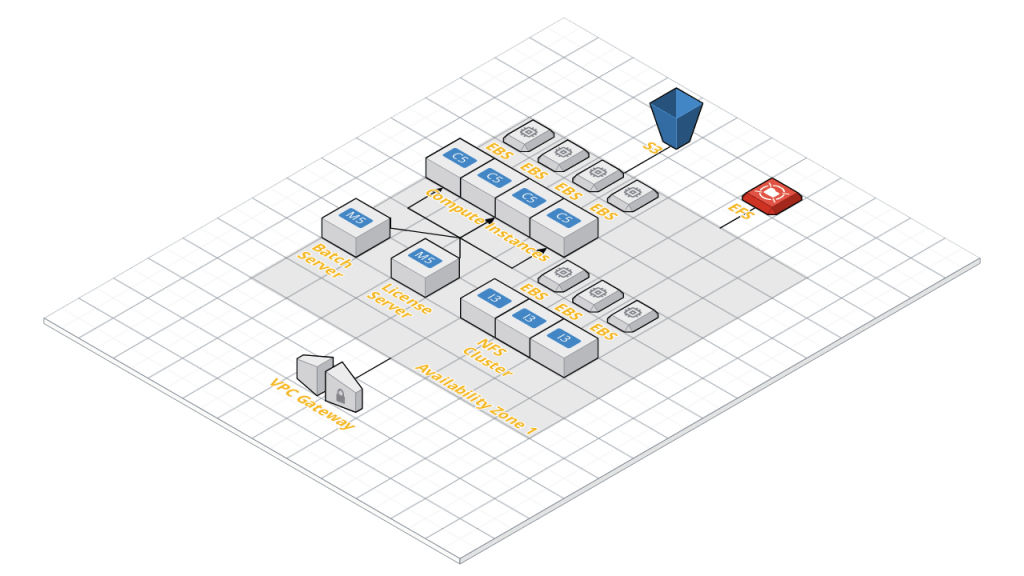

Here’s a generic architecture diagram for translating EDA HPC into the Cloud.

This one should look a little more familiar. We’ve got our batch scheduler, a license server, some compute nodes and some storage out there. This is a direct translation of the high level architecture diagram most of us are used to. Let’s walk through our earlier analysis and see how this compares to modern web architecture.

Load Balancing

The only “load balancer” we have in this scenario is our Batch Scheduler. Too much traffic going to your NFS server? No different than when that happens on-prem today. Same for everything else.

Fault Tolerance

Compute

Compute servers going down is not catastrophic, unless you’re running a multi-day signoff job. In which case, you can expect what you’d normally expect for losing a multi-day job.

Licensing

The single license server going down would be a problem. Now, there IS a way to have fault tolerant license servers, but if you’ve read my Wish List for FNP, you’ll know that I don’t like the answer. It’s using license triads across availability zones.

Storage

There are three types of storage on this diagram.

- S3, which is Amazon’s Simple Storage Service. S3 is what is known as object storage, and again every major cloud provider has a version of this. It is a managed service with all of the complexity of normal filesystems pared down to a simple RESTful interface.

- EFS, Amazon’s Elastic File System. EFS is their managed NFS service (supporting NFS 4.1 and as of April 5, 2018, TLS encryption). Azure now offers NFS, though Google Cloud does not have a managed offering as of this writing.

- An NFS Cluster. There are several high performance in-Cloud NFS file services out there, such as Avere, Weka.io, Pure Storage, Elastifile, Network Appliance, and even IC Manage. These services promise higher read/write performance scaled across thousands of compute instances. Or if you prefer, you can “roll your own” NFS service using compute instances and block-based storage.

For the first two, S3 and EFS, you have a tremendous amount of durability. Amazon publishes 99.999999999% durability for objects in S3. They do not post a durability number for EFS, but since the data is written across multiple AZs, we’ll assume it’s high. Now, I have S3 on this diagram because you’re probably going to have things like bootstrap scripts or other artifacts you want stored in a highly available way. But in today’s world, EDA tools can’t talk directly to object storage. If you want to use it, you have to copy data onto a POSIX filesystem and then copy the results back. Realistically, not many places are going to make heavy use of object storage unless they are buying a third-party NFS front-end which does the translation for them.

EFS looks great on paper. It’s got a ton going for it. It’s a managed service, and it’s “infinitely scalable”. You don’t have to worry about provisioning a particular size; it just grows to your needs. It now supports NFS v4.1 with TLS encryption in transit, which is way cool. Your data lives in multiple AZs. However, its performance is based on how much storage you are consuming. This leads some places to put large, dummy files out there just to get the performance they need. In addition, the multi-AZ writes can slow things down. I’ve heard through the grapevine that read/write performance can’t keep up with EDA HPC workloads. So in all likelihood, you’d either not use EFS or you’d use it for read-only data like application data or static libraries.
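To see why people resort to dummy files, here is a back-of-the-envelope sketch in Python. It assumes a baseline of 50 MiB/s of throughput per TiB stored, which is my recollection of the figure for EFS bursting mode; treat that number and the `padding_needed_tib` helper as assumptions and check the current EFS documentation before relying on them.

```python
def padding_needed_tib(target_mib_s, stored_tib, baseline_mib_s_per_tib=50.0):
    """Return how many TiB of filler data are needed to reach a target throughput.

    baseline_mib_s_per_tib is an assumed figure, not an authoritative one.
    """
    required_tib = target_mib_s / baseline_mib_s_per_tib
    return max(0.0, required_tib - stored_tib)


# 2 TiB of real data, but the flow needs a sustained 500 MiB/s.
print(padding_needed_tib(500, 2))  # 8.0
```

Under that assumption, you would be paying for four times as much filler as real data just to hit the throughput target.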

Then we get to the NFS solution. If you decide to “roll your own”, you’re only as good as your storage architect and the amount of concurrency you throw at your data. You can roll out ZFS or Gluster or a few other solutions, but bottom line you are on the hook for that all the way. And anyone who’s been around for a while knows that it’s not only possible but probable that an NFS server will be overrun at some point by an overzealous engineer or team.

Now, if you go with a commercial solution, you’ve got a much better chance of handling high load. Many of the commercial solutions out there are doing truly innovative things with distributed storage. If you’re up to date with the HBO show Silicon Valley, you’ll think that you’re using the “New Internet”. Cloud architectures and automation capabilities give them unprecedented abilities to distribute your read/write operations across multiple nodes. But these solutions do not come cheap. Another somewhat common paradigm here is that in almost all cases, your main NFS service lives in just a single AZ. If you have an AZ-wide failure, you either have to re-replicate data from on-prem back out to the cloud (which could take hours or days) or you have to restore from an S3-based snapshot of some kind, potentially losing some amount of data depending on how often you snapshot. Oh, and creating new NFS clusters in these scenarios may or may not have all the automation you need for a new server to just come up without human intervention.
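The "hours or days" estimate for re-replicating from on-prem is easy to sanity check. The sketch below is plain arithmetic in Python; the dataset size, link speed and the 80% link efficiency are hypothetical numbers chosen for illustration.

```python
def transfer_hours(data_tb, link_gbps, efficiency=0.8):
    """Estimate hours to move data_tb terabytes over a link_gbps link.

    efficiency is an assumed fraction of the link usable for the copy.
    """
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


# Hypothetical: 100 TB of project data over a 10 Gbps link.
print(f"{transfer_hours(100, 10):.1f} hours")
```

With these numbers the copy takes a bit over a day, and that is before anyone has verified the data or brought services back up.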

The long and the short of it is, you’re either going to roll your own solution and have to deal with “NFS Server not responding” messages (causing everything to come to a halt), or you’re going to invest in yet another storage platform. And if you have an AZ outage, regardless of the solution, you could be in for a world of pain while you remediate things manually.

Auto Scaling

Compute

Our Batch Scheduler is auto scaling compute for us. If you get fancy and use AWS Batch or if you have been able to containerize your workloads and can use ECS or EKS, then you’ve got the container manager to handle that for you. But odds are you’re going to be using a more traditional Batch Scheduler than a container orchestrator, at least in the short term.

Licensing

You can create autoscaling groups for license servers, though you must make sure you are using an Elastic Network Interface (ENI) to ensure that the MAC address of your license server survives a termination event. If you don’t, you’ll get a new MAC address upon autoscale, rendering your existing licenses useless.

Storage

You could autoscale your home-grown NFS service, though if there is a problem inside a single AZ it may be for nought anyway. Elastic Block Store (EBS) volumes which would attach to your storage solution exist in a single AZ, so if you have to evacuate an AZ you need some kind of snapshot capability because you are going to have to restore from snapshot in your new AZ.

Third party NFS services are going to deploy a service for you, meaning that it’s very unlikely you can put them into an autoscale group. You’d definitely need a coder handy to put some form of automation in place, but I’m not aware offhand of a solution that is totally amenable to a completely automated way of achieving multi-AZ failover.

Network Segmentation

You certainly can have multiple AZs. However, let’s just say that you put your data in AZ2 and your compute in AZ1. Now you’re paying the latency penalty for moving traffic between datacenters, which no one would ever do on-prem. And you are also paying for cross-AZ traffic, which is a very real monetary cost. So for the moment, for all practical purposes, EDA HPC architectures will tend to favor a single-AZ deployment. This obviously limits our ability to have self-healing architectures, since we’re dependent upon a single datacenter.
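To put a rough number on the cross-AZ charge, here is a small Python sketch. The rate of $0.01 per GB in each direction and the daily traffic volume are assumptions for illustration; check your provider's current pricing before using them.

```python
def cross_az_cost(gb_moved_per_day, usd_per_gb_each_way=0.01, days=30):
    """Estimate the cost of compute in one AZ reading data from another.

    Assumes traffic is billed on both the sending and the receiving side.
    """
    return gb_moved_per_day * usd_per_gb_each_way * 2 * days


# Hypothetical: compute in AZ1 pulls 5 TB a day from storage in AZ2.
print(f"${cross_az_cost(5000):,.2f} per month")
```

That works out to roughly $3,000 a month for this example, on top of the latency penalty.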

What Got Us Here Won't Get Us There

We’ve had a really good run. It’s been 30 years of a mostly static compute architecture. System administrators have had relatively stable paradigms to learn. Software developers have focused on how they can shrink geometries and not worried so much about complicated things like message passing or doing file I/O to non-POSIX-compliant filesystems. Flow developers have relied upon system and software architecture being predictable and the same year after year.

But let’s face some facts. Building and supporting data centers is an expensive endeavor. There are facilities costs, power costs, personnel costs, rack costs, cabling costs and equipment costs. The momentum to move these long-range fixed capital costs to short-term, controllable variable costs is not going to go away. At some point, you are going to go to your Executives and pitch a major datacenter build, remodel or refresh. And they are going to tell you to come back when you’ve done an analysis of running in the Cloud. And eventually, you’re going to have to find a way.

But as it is today, you’d be bringing all of your 30 years of 20th Century architecture onto a 21st Century Platform. Your headaches will stay the same and will be worse because you’ll be running on a new platform which you don’t know as well as your tried and true legacy systems. You won’t have the benefit of resiliency using things like load balancers and multi-AZ deployment paradigms because they’re expensive for your use case and they don’t make sense. Your Cloud provider will be telling you about all of these benefits to the Cloud but the results will be totally incongruous to your experience because you’ll be putting a square peg into a round hole.

We, as an industry, have to begin the process of embracing newer, modern architectures. Until we can decouple services from individual servers and utilize stateless transactional paradigms, we’ll be stuck with what we have today: an architecture with a practical scale-out limit, loads of choke points that can cause the whole system to seize up and an environment that is impossible to police from a data security standpoint. Even if the end goal isn’t to go to the Cloud, we have to find ways to modernize the underlying infrastructure to remove those choke points. In my next post, we’ll discuss some proposals on what we can embrace to have a path forward.